The recent rollercoaster – many have used stronger words – that schoolchildren faced when they were unable to take their exams this summer has a read-over to our justice sector.

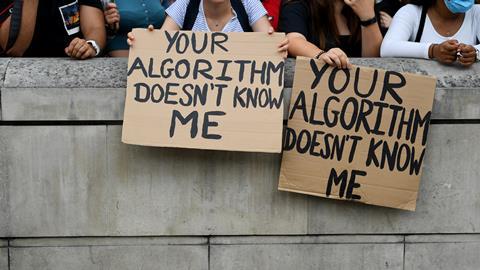

Since they were unable to be judged by exams, pupils undertaking GCSEs and A-levels were initially judged by an algorithm, which led to weightings in favour of past performance. So, among other anomalies, a bright pupil from a historically poorly-performing school would be downgraded. Some pupils failed exams that they had never had the chance of sitting. The uproar led to the replacement of the algorithm by teacher judgement.

Teacher judgement had its own consequences. There was grade inflation, since teachers presumably took a more benign view of their pupils than impartial examiners would have done. But the interesting consequence is that teacher judgement – in other words, human judgement – has made the fuss go away. There seems in this case a general acceptance that human judgement is better than computer judgement.

There are already algorithms in use in the justice sector, affecting our lives. The Law Society published a well-received report last year highlighting that algorithms are increasingly being used in criminal justice, from identifying criminals to administering justice and rehabilitation – ‘The use of algorithms in the criminal justice system’.

The same kind of outcomes as were seen in the exam debacle were noted in justice algorithms, because in some cases data such as postcodes are used. Where postcode data are used to make judgments, it is obvious that people are no longer being judged on their individual characteristics, but on where they live.

It is not fanciful to think that decisions by algorithm will play an increasing part in all our lives, including in justice. The computerisation of justice continues apace after our collective lockdown experience during the pandemic.

The Law Society boasted last week about the experience of the business and property courts in England and Wales during lockdown:

‘Since the lockdown began, 85% of national and international business disputes in the business and property courts are being concluded remotely using technology. Commercial court users have reported almost no backlog of cases and that the courts continue to have similar levels of business to previous years.’

The European Commission has recently announced a consultation on the 'Digitalisation of justice in the EU', directly linked to the pandemic: 'The COVID-19 crisis has highlighted the need to launch and accelerate different actions related to the transformation of justice by employing digital technologies'. The consultation closes on 10 September.

The Commission expressly says that this consultation goes hand in hand with studies it is about to publish on digital criminal justice, and on innovative technologies such as artificial intelligence (AI) and distributed ledger technologies such as blockchain in justice systems.

This article is concerned only with AI, which is where the use of algorithms falls (and is not concerned with areas such as digital case management, document filing or remote hearings).

Algorithms are already widely used by large corporations in the settlement of low-value disputes, and with evident consumer satisfaction. Indeed, the European Commission has funded a project in the past, CREA, which had as its specific aim the development of algorithms to help with cross-border disputes.

Few people may mind the use of an algorithm to resolve a low value dispute, where it is hardly worth consulting a lawyer and going before a judge. But it would be naïve to think that, once the algorithm has advanced towards greater perfection, it will not be used higher up the dispute resolution food-chain.

When considering these questions, in response to a European Commission consultation earlier this year on AI, the Council of Bars and Law Societies of Europe (CCBE), of which the Law Society remains a member through the UK delegation, insisted that nothing in the application of AI to the justice sector should infringe on the right to a fair trial. In particular, it said that 'a right to a human judge should be guaranteed at any stage of the proceedings'.

And that seems to me the core of it. The exam rollercoaster showed that it is too early to replace human judgement in grading academic achievement where there is no exam. I am not an academic expert, and I suppose the replacement of all current exams with multiple choice questions may remove humans from marking in due course.

But justice is not an exam for which you study, nor for which a multiple choice test is suitable. As the CCBE notes: 'AI systems do not understand the entire context of our complex societies.'

Lawyers and their professional organisations must be involved in the development of justice algorithms, and for the time being, we must insist that, in civil and criminal cases, every individual has the right to a human judge.

Jonathan Goldsmith is Law Society Council member for EU matters and a former secretary general of the Council of Bars and Law Societies of Europe. All views expressed are personal and are not made in his capacity as a Law Society Council member nor on behalf of the Law Society.
